🎯 Purpose:The robots.txt file guides search engine crawlers (Googlebot, Bingbot, etc.) on how to access your website.

📌Robots.txt Guide: Control Search Engine Crawlers Efficiently

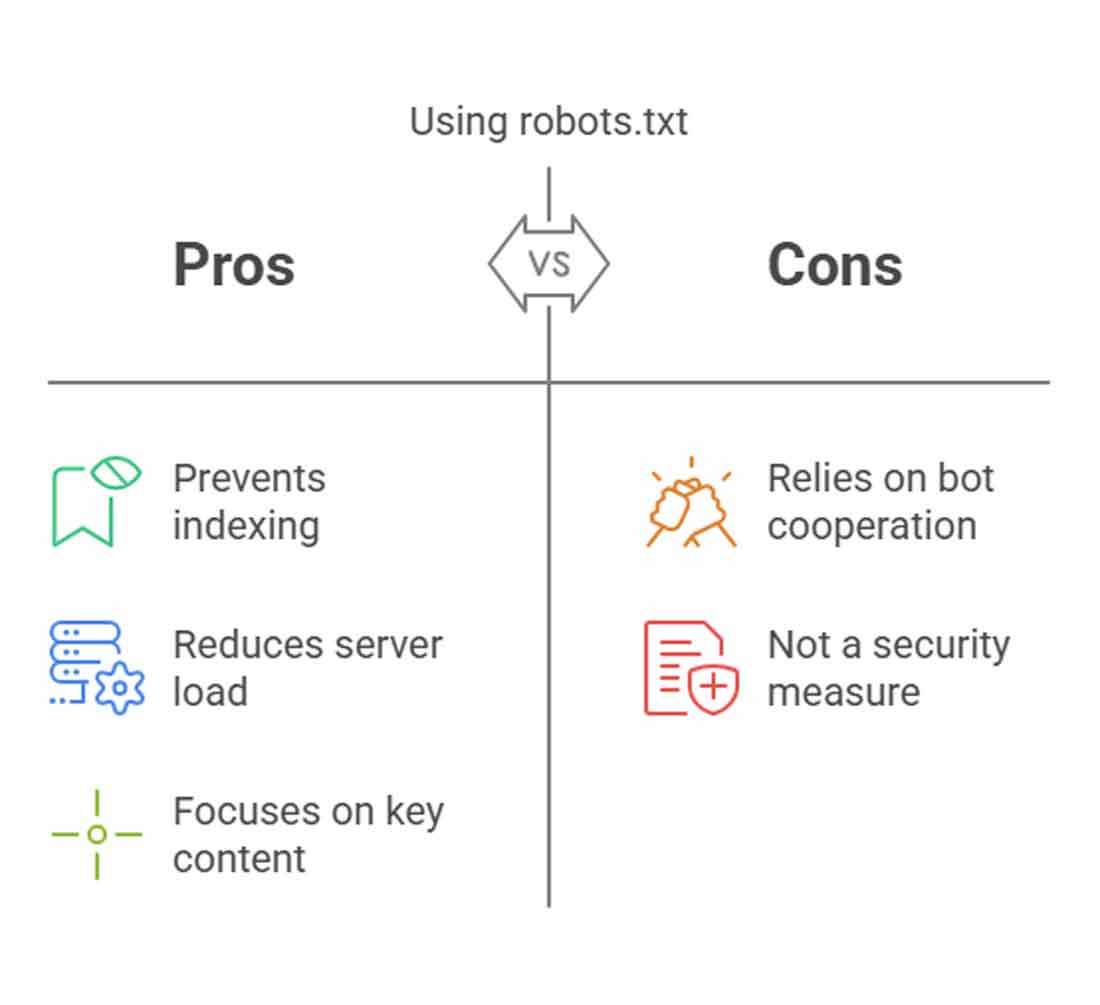

Common uses

Prevents🤫 indexing of specific pages (e.g., admin panel, private sections)

Reduces 📉 server load

Focus 🎯 bots on key content

⚠️ Important: Relies on bot cooperation! Not a security measure against malicious bots. 🛡️🚫

📍 Location of robots.txt

The robots.txt file must be placed in the root directory of your website.

📂 Example Path:

https://example.com/robots.txt

🚨 Important:

❌ If placed in a subdirectory (https://example.com/folder/robots.txt), it won’t work!

✅ Always ensure it’s accessible via https://yourdomain.com/robots.txt.

⚠️ If the robots.txt file is not in the root directory, robots will generally ignore it.

📌 General Structure and Directives of robots.txt

A robots.txt file consists of rules that control which parts of a website robots can access. 🤖📜

🔹 User-agent Directive

Specifies which bot the rule applies to:

🛠️ User-agent: * → Applies to all robots.

🔍 User-agent: Googlebot → Applies only to Google’s web crawler.

🔎 User-agent: Bingbot → Applies only to Bing’s web crawler.

🚫 Disallow Directive

Blocks bots from crawling specific pages or directories:

❌ Disallow: /private/ → Blocks access to /private/.

❌ Disallow: /temp.html → Blocks access to /temp.html.

⚠️ Disallow: / → Blocks entire website! Use with caution! 🛑

✅ Allow Directive

Overrides Disallow and lets bots crawl specific content:

✅ Allow: /public/allowed.html → Lets Googlebot crawl /public/allowed.html even if /public/ is blocked.

📢 Note: Not all bots support Allow, but Googlebot does!

🗺️ Sitemap Directive

Helps search engines find important pages:

📍 Sitemap: https://www.example.com/sitemap.xml

Example robots.txt File

# 🔹 User-agent Directive User-agent: * # Applies to all robots User-agent: Googlebot # Applies only to Google’s web crawler User-agent: Bingbot # Applies only to Bing’s web crawler # 🚫 Disallow Directive Disallow: /private/ # Blocks access to /private/ Disallow: /temp.html # Blocks access to /temp.html Disallow: / # Blocks entire website! ⚠️ Use with caution! # ✅ Allow Directive Allow: /public/allowed.html # Lets Googlebot crawl /public/allowed.html even if /public/ is blocked # 🗺️ Sitemap Directive Sitemap: https://www.example.com/sitemap.xml

#SEO#SearchEngineOptimization#WebsiteOptimization#RobotsTXT#BotManagement#Crawling

#Indexing